J. Cent. South Univ. Technol. (2008) 15: 141-146

DOI: 10.1007/s11771-008-0028-5

![]()

An extended particle swarm optimization algorithm based on coarse-grained and fine-grained criteria and its application

LI Xing-mei(李星梅), ZHANG Li-hui(张立辉), QI Jian-xun(乞建勋), ZHANG Su-fang(张素芳)

(School of Business Administration, North China Electric Power University, Beijing 102206, China)

Abstract:

In order to study the problem that particle swarm optimization (PSO) algorithm can easily trap into local mechanism when analyzing the high dimensional complex optimization problems, the optimization calculation using the information in the iterative process of more particles was analyzed and the optimal system of particle swarm algorithm was improved. The extended particle swarm optimization algorithm (EPSO) was proposed. The coarse-grained and fine-grained criteria that can control the selection were given to ensure the convergence of the algorithm. The two criteria considered the parameter selection mechanism under the situation of random probability. By adopting MATLAB7.1, the extended particle swarm optimization algorithm was demonstrated in the resource leveling of power project scheduling. EPSO was compared with genetic algorithm (GA) and common PSO, the result indicates that the variance of the objective function of resource leveling is decreased by 7.9%, 18.2%, respectively, certifying the effectiveness and stronger global convergence ability of the EPSO.

Key words:

particle swarm; extended particle swarm optimization algorithm; resource leveling;

1 Introduction

From the enlightenment of life phenomenon, many intelligent optimization methods were invented to solve the complex optimization problems[1]. For example, genetic algorithm drew references from the development of biology species through inherit and natural selection[2]. Artificial immune systems simulated biological immune system’s learning and cognitive function[3]. Ant colony optimization algorithm imitated the ant groups’ conduct in the path choice and the transmission of information[4]. Particle swarm optimization algorithm simulated the birds’ coordinated mechanism of the individual and group in their migrate[5].

With the progress of computer science and intelligent optimization algorithm, the failure of traditional optimization algorithm can be well avoided. Compared with genetic algorithm(GA), particle swarm optimization algorithm is faster and the memory function can help it achieve the solution of complex combinatorial optimization better.

Particle swarm optimization algorithm has become a focus of intelligent optimization algorithm[4]. But in practical applications, because of the evolution mechanism of the algorithm itself, using particle swarm optimization(PSO) algorithm can easily trap into local mechanism and appear prematurely when the high dimensional complexity is analyzed[6]. Therefore, the improved strategy for solving this problem is proposed by introducing expansion factors, the flaws of optimizing the algorithm are overcome. High-dimensional, complex optimization problems such as power project resources leveling were selected to validate the algorithm optimization results. The improved PSO algorithm is obviously more effective as compared with the common PSO algorithm and GA.

2 Particle swarm optimization algorithm

Particle swarm optimization algorithm that was first mentioned by KENNEDY and EBERHART[4] sourced from the research of birds prey. This algorithm is a general heuristic searching technology and has been used in solving optimization problems[7]. A horde of birds are looking for food within an area randomly, and every bird knows how far the food is from his own position. So the simplest and most efficient strategy is searching for the nearest food.

The PSO algorithm can be used to solve numerous non-linear, non-differential as well as multi-peaks complex optimizing problems[8-9] and the implement- ation of this algorithm is extremely simple. Few parameters are needed to be adjusted. So it has the advantages of constringency fast and being easily implemented. For these reasons it has been developed rapidly. Many improved PSO algorithms have appeared and are used in various subjects and engineering areas[10-11].

First, the PSO algorithm will initialize the hordes of particles randomly in given solution space, of which the dimension is determined by the variables of the number of problems waiting to be optimized, and every particle will be given an initiate position and speed. Then through the iterative optimization method, every particle will update its own position and speed in the solution space by tracking two “extreme values” within every iterative process[12].

The mathematic description of the PSO algorithm could be demonstrated as follows.

All the particles updated their speed and position according to Eqn.(1) and (2).

![]() (1)

(1)

![]() (2)

(2)

where the subscript t denotes iterative times; r1,i(0,1) denotes the generated random number within 0 and 1; xt that determines the particle’s single iterative displacement in the searching space is a n-dimension vector of the position; pt is the position of current individual optimal particle; gt is the position of global optimal particle; c1 and c2 are learning parameters, commonly c1=c2=2; w is inertial weight, the algorithm will get larger and be apt to local searching when it is small. Commonly the value of w has powerful global searching ability when the value of w is initiated to be 0.9, and then with the iterative time linearly decreasing to 0.4; speed vt can be evaluated within vmax and vmin, and be adjusted to the limit value when it exceeds the bound; vmax is a constant that restricts the maximum speed (user defines). It is good for global searching when vmax is large, but there is likelihood of missing the most optimal answer, and it is good for local searching when vmax is small. Particles can search carefully in the particular area, but are easy to fall into local optimization.

3 Extended particle swarm optimization algorithm

3.1 Model of extended particle swarm optimization algorithm

In the basic PSO algorithm, updating each particle is influenced by two “extreme values” within every iterative process. In order to increase its global convergence of the algorithm and effectiveness, each particle can be updated by the impact of many other particles. According to the factors like space distance, fuzzy technology was used to distinguish impacts. Such a mechanism considering the impact of PSO multi-particle cooperation model is called the extended particle swarm optimization (EPSO) algorithm.

With regard to EPSO algorithm, in the process of the iterative optimization, particles contained more information. The basic formula of EPSO algorithm is shown as follows:

![]() (3)

(3)

and

![]() (4)

(4)

where Ψi=c1,ir1,i(0, 1) and ζi=c2,ir2,i(0, 1); the subscript t denotes iterative times, xt denotes the particle interspaces, vt denotet the particle speed, pt denotes the position of individual extreme value particle, ![]() denotes local extreme value particle and global extreme value particle, r1,i(0, 1) and r2,i(0, 1) are random between 0 and 1, c1,i and c2,i are control parameters. The comparison of Eqn.(3) and Eqn.(1) shows that EPSO algorithm takes full account of information contained in more particles in the process of iterative optimization, and it has stronger global convergence, according to the speed and position value of updating itself of optimal individual extreme particle. PSO algorithm is only a special case in EPSO algorithm in which m and n are equal to 1.

denotes local extreme value particle and global extreme value particle, r1,i(0, 1) and r2,i(0, 1) are random between 0 and 1, c1,i and c2,i are control parameters. The comparison of Eqn.(3) and Eqn.(1) shows that EPSO algorithm takes full account of information contained in more particles in the process of iterative optimization, and it has stronger global convergence, according to the speed and position value of updating itself of optimal individual extreme particle. PSO algorithm is only a special case in EPSO algorithm in which m and n are equal to 1.

3.2 Astringency of extended particle swarm optimization algorithm

As EPSO algorithm considers the information contained in more particles values, which are used in iterative optimization process, and more control parameters are introduced. It must be ensured that the choice of control parameters is appropriate to make the algorithm convergence. Now by testifying the convergence condition of EPSO algorithm, the control parameter setting strategy of the algorithm is proposed.

The recursive formula calculation of EPSO algorithm is as follows: taking Eqn.(3) into Eqn.(4) and from vt=xt-xt-1, yields

![]() (5)

(5)

In each iterative process, pt and ![]() are constants, Eqn.(5) can be

are constants, Eqn.(5) can be

(6)

(6)

where ![]() ,

, ![]() ,

, ![]()

![]() Based on the above matrix latent root solution, the solution of the recursive Eqn.(5) is:

Based on the above matrix latent root solution, the solution of the recursive Eqn.(5) is:

![]() (7)

(7)

where α and β are matrix latent roots,

![]()

![]() and

and ![]() , k1, k2 and k3 at each iteration process are constants, and can be recursively calculated based on the initial random distribution of the particle position.

, k1, k2 and k3 at each iteration process are constants, and can be recursively calculated based on the initial random distribution of the particle position.

The convergence of EPSO algorithm and the condition of![]() are constants:

are constants:

if ![]() ,

,

then ![]() ,

,

if ![]()

then ![]() is emanative, θ and σ are constants at each iterative step. When

is emanative, θ and σ are constants at each iterative step. When ![]() ,

, ![]() ≤1, it can ensure that the algorithm is convergence. Therefore, the control parameter selection can guarantee the convergence of the algorithm.

≤1, it can ensure that the algorithm is convergence. Therefore, the control parameter selection can guarantee the convergence of the algorithm.

The above convergence analysis does not consider the probability factors triggered by the random in the algorithm formula. Due to ![]()

![]()

![]() the controls parameters ψ and ζ at iterative process of swarm optimization algorithm are as follows:

the controls parameters ψ and ζ at iterative process of swarm optimization algorithm are as follows:

![]() (8)

(8)

and

![]() (9)

(9)

Therefore, ![]() and

and ![]()

![]() can be got in instance when considering probability stochastic factors at the process of swarm iterative, and make E[Ψ]∈(0, 1.496 18) and E[ζ]∈(0, 1.496 18) satisfy convergence rule of algorithm.

can be got in instance when considering probability stochastic factors at the process of swarm iterative, and make E[Ψ]∈(0, 1.496 18) and E[ζ]∈(0, 1.496 18) satisfy convergence rule of algorithm.

3.3 Setting guidelines of control parameters in extended particle swarm optimization algorithm

Different from the PSO algorithm, ψ is the sum of the m optimal weights of individual extreme value particles, ζ is the sum of the![]() local maximum particle or particles overall extreme weights. The degree of influence of various particles varies with their different solutions for optimality. The smaller the fitness, the greater the impact. For the characteristic of EPSO algorithm, options for the maximum particle weights in the cases of coarse-grained and fine-grained are given as follows.

local maximum particle or particles overall extreme weights. The degree of influence of various particles varies with their different solutions for optimality. The smaller the fitness, the greater the impact. For the characteristic of EPSO algorithm, options for the maximum particle weights in the cases of coarse-grained and fine-grained are given as follows.

1) Fine-grained criterion

,

,  (10)

(10)

where fi and ![]() are individual extreme particle and local extreme value particle, respectively. In the fine-grained criterion, the influence of each particle on other particles in its iterative process is based on its optimal performance. Considering the iterative process of random probability circumstances, the value of c can be 2×1.496 18.

are individual extreme particle and local extreme value particle, respectively. In the fine-grained criterion, the influence of each particle on other particles in its iterative process is based on its optimal performance. Considering the iterative process of random probability circumstances, the value of c can be 2×1.496 18.

2) Coarse-grained criterion

![]() ,

,![]() , c=1.496 18/2 (11)

, c=1.496 18/2 (11)

Optimum particles have greater impact. Due to ![]() process of calculating

process of calculating ![]() ,the algorithm of

,the algorithm of ![]() is as the same as

is as the same as![]() Therefore, Eqn.(11) can guarantee the convergence of the particles. Considering the iterative process of random probability circumstances, the value of c can be 1.496 18.

Therefore, Eqn.(11) can guarantee the convergence of the particles. Considering the iterative process of random probability circumstances, the value of c can be 1.496 18.

In iterative process, factors considering random probability under the influence of a control parameter admit to the expectations E randomness and probability factors without considering the impact of the control parameters for the relationship between expectations is E=2E′. According to Eqn.(3) of EPSO algorithm, considering the probability of the random parameter selection factors iterative process will allow the particles to get greater expectations of fly distances |v|, so it is propitious for particles to jump out of local convergence regional, making algorithm not converge to the local optimal solution. However, in the parameters of options considering the influence of probability random factors, particle swarm will oscillate in the iterative process, so after a certain number of iterations, the impact of probability random factors should not be considered to accelerate the convergence of all particles in particles swarm.

4 Improved particle swarm optimization algorithm to solution of resource leveling

The planning and implementation of power plant project have the characters of “within long period, large resource devotion, and resource imbalance”. Project resource distribution is not an ideal state, but is “multi-peak” and “multi-valley”. This imbalance increases investment risks, which may cause waste of resources. Therefore, it is urgently necessary to make a reasonable adjustment in the network planning process in order to achieve a balanced allocation of resources and to solve the problems. Resource leveling can be classified into a mathematical model with a class of nonlinear programming, but there are limitations in study. In large-scale networks, the CPU time for solving such problems increases exponentially with the rising of the number of network nodes. As for different network structures and different parameters, the influence on resources leveling is not the same. Network parameters can be considered as particles. Expanded PSO algorithm considers about the optimal individual particles, and the weighted processing identifies global optimal program. It avoids the process of optimization in a “local optimization, and global non-gifted” result. Therefore, the application of particle swarm algorithm in resources leveling can extend profound manifestation of the superiority of the algorithm.

4.1 Mathematical model of resource leveling optimization

Under the condition of determining the time parameters of initial plan and resource intensity of every procedure, making the minimization of resource intensity variance be goal, and the decided project period duration and the proceeding relationship of every procedure be constraints, the mathematics optimization model is as follows:

![]()

When the procedures time and resources are determined, the optimization model could be simplified as

![]()

The constraints are![]() ,

,

![]()

max{TS(i)+T(i)}≤TL(i), TE(i)≤TS(i)≤TL(i),

i=1, 2, …, N; t=1, 2, …, T.

where N is the activities number, T is the planned project duration, Ri(t) is the resource intensity of activity i, ![]() , TE(i) is the earliest start time of the

, TE(i) is the earliest start time of the

activity i, TL(i) is the latest start time of the activity i, Ts(i) is the actual start time of the activity i and T(i) is the duration of the activity i.

4.2 Representations of particle position vector, solution space and speed

The goal of resources leveling is to find a network plan, of which the starting time parametric portfolio can make the consumption of resources achieve a balanced state, namely to make the variance of the resource consumed smallest. Therefore, the position of the particle can be seen as the starting time of all activities, and the solution space is a set of the starting time of all activities.

Each position vector of particle expressed a solution, assuming the ith particle location can be expressed as TS(i)=(TS(i1), TS(i2), …, TS(in))(i=1, 2, …, N), N is the particle number, n is the number of activities, in which TS(i1), TS(i2), …, TS(in) represent the starting time of n activities.

According to the above formulas, Ri(t), objective value Fi and individual optimal particles pi,t=(pi1, pi2, …, pim) are proposed. As for the entire particles space, there is a global optimal particle g=(g1, g2, …, gn). Then the velocity can be expressed according to the improved particle swarm algorithm as follows:

![]()

![]() (12)

(12)

4.3 Algorithm description

The searching steps of solution are shown as follows:

1) Initializing the particle swarm, including the population size N, position of each particle TS(i) and velocity vi etc. Initial particle position TS(i)=(TS(i1), TS(i2),…, TS(in)) can randomly select N particles that meet the constraints. As for the initial velocity values, the length of vi is set as random number in the range of [-2, 2].

2) According to formulas and the simple model

![]() , the corresponding objective value of

, the corresponding objective value of

every particle Fi is calculated.

3) For each particle, comparing the objective value Fi with individual extreme value pi, if Fi>pi, then pi is replaced with Fi.

4) For each particle, comparing the objective value Fi with the global extreme value g, if Fi>g, then g is replaced with Fi.

5) According to Eqns.(3) and (4), the velocity vi and the location of particles xi are updated.

6) Withdraw from the cycle if the end conditions (the largest cycle number or the cycle number achieves the optimal values) are met, otherwise back to 2).

5 Example analysis

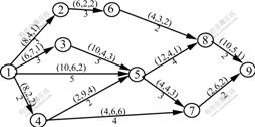

In a project, the network graph is shown in Fig.1, in which above the arrow line is the combination of resources required daily(R1, R2, R3), below the arrow line is the duration T(i) of the activity i. The sequence number of activities are:1—2:activity 1; 1—3:activity 2; 1—4:activity 3; 1—5:activity 4; 2—6:activity 5; 3—5:activity 6; 4—5:activity 7; 4—7:activity 8; 5—7:activity 9; 5—8:activity 10; 6—8:activity 11; 7—9:activity 12; 8—9:activity 13.

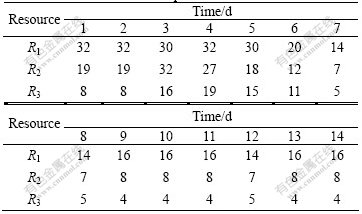

The use of resources before optimization is shown in Table 1.

Fig.1 Project network graph

Table 1 Resource use before optimization

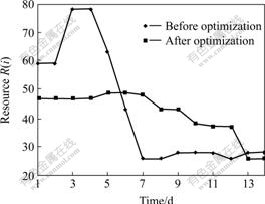

Based on Table 1, the trend curve of the resource use was proposed (see Fig.2).

From Fig.2, it can be seen obviously that the resource use of prophase is more efficient than anaphase of the project. Therefore, the resource is not level.

The number of particles can be given as 60 when using improved PSO. The actual starting time of the ith particle is TSi=(TSi1, TSi2, …, TSin), which meet certain restrictions. The value of initial velocity vi is set as the random number in the range of [-2, 2]. The maximum velocity of every activity is set to ensure the beginning time of the activity, which would not fly over the latest starting time. Meanwhile, the maximum velocity of activity particle needs to be determined according to the specific requirement of the program. After initialization, the particles with the objective function are begun to be evaluated and the current Fi, pi and g can be got. Then update the particles, repeat this step and stop until the conditions are satisfied. The end condition is that the largest cycle number is 1 000.

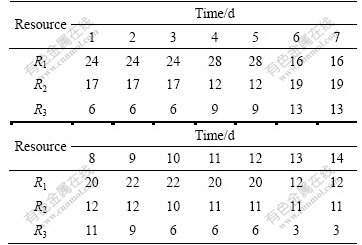

By using MATLAB7.1 software, the calculation result is TS=(0, 0, 3, 0, 7, 3, 5, 5, 9, 8, 10, 12,12), which means the beginning time of the activities. And TS=(0, 0, 0, 0, 3, 3, 2, 2, 8, 8, 6, 11, 12) before the resource is optimized. On computation, the variance corresponding to F=889.153 8 is σ2=13.720 2. The variance corresponding to F=765.307 7 is σ2=3.645 393 after optimization. The resource allocation is more balanced than pre-optimization.

Based on Table 2, the trend curve of the resource use was proposed (see Fig.2).

Table 2 Resource use after optimization

Fig.2 Trend curve of resource use after optimization

From Fig.2, the resource use after optimization is more balanced than before optimization.

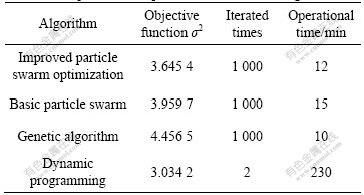

Meanwhile, the data quoted using dynamic planning, GA, as well as non-heuristic algorithm were calculated in this paper. Table 3 shows that the improved PSO improves more significantly than traditional dynamic programming methods and achieves a better result by comparing with the results of the four optimization algorithm.

Table 3 Comparison of improved PSO with other algorithms

6 Conclusions

1) As the particle swarm algorithm has the deficiency of local optimization, the algorithm’s performance is improved by increasing the convergence factors, trying to speed up the convergence and to avoid falling into the local optimal shortcoming.

2) The improved particle swarm hybrid optimization algorithm is adopted to compare and analyze three traditional methods, namely genetic algorithm, original particle swarm optimization algorithm and dynamic programming algorithm; the results demonstrate the superiority of the improved algorithm.

3) Based on the result analysis, the extended particle swarm optimization being applied to the problem of resource leveling can make the resource more balance than ever. Furthermore, the applications of this algorithm are to be studied further.

References

[1] OSMAN I H. A tabu search procedure based on a random roulette diversification for the weighted maximal planar graph problem[J]. Computers and operations Research, 2006, 33(9): 2526-2546. (in Chinese)

[2] Hegazy T. Optimization of resource allocation and leveling using genetic algorithms[J]. Journal of Construction Engineering and Management, 1999(6): 167-175.

[3] GUO Yan, NING Xuan-xi. Using genetic algorithms for multi-project resource balance[J]. Systems Engineering: Theory and Practice, 2005(10): 78-82. (in Chinese)

[4] KENNEDY J, EBERHART R C. Particle swarm optimization[C]// Proceedings of IEEE International Conference on Neural Networks. Piscataway, NJ: IEEE Press, 1995: 1942-1948.

[5] EBERHART R C, SHI Y. Particle swarm optimization: Developments, applications and resources[C]// Proceedings of 2001 Congress Evolutionary Computation. Piscataway, NJ: IEEE Press, 2001: 81-86.

[6] PARSOPOULOS K E, VRAHATIS M N. Particle swarm optimization method for constrained optimization problems[C]// Intelligent Technologies: from Theory to Applications. Amsterdam: IOS Press, 2002: 214-220.

[7] EBERHART R C, KENNEDY J. A new optimizer using particle swarm theory[C]// Proc on 6th International Symposium on Micromachine and Human Science. Piscataway, NJ: IEEE Service Center, 1995, 39-43.

[8] KENNEDY J. The particle swarm: Social adaptation of knowledge[C]// IEEE International Conference on Evolutionary Computation. Piscataway, NJ: IEEE Service Center, 1997: 303-308.

[9] WANG Ding-wei. Colony location algorithm for assignment problems[J]. Journal of Control Theory and Applications, 2004, 2(2): 111-116.

[10] HOPFIELD J J, TANK D W. Neural computation of decision in optimization problems[J]. Biological Cybernetics, 1985, 52: 141- 152.

[11] LI Xiang, YANG Shang-dong, QI Jian-xun, YANG Shu-xia. Improved wavelet neural network combined with particle swarm optimization algorithm and its application[J]. Journal of Central South University of Technology, 2006, 13(3): 256-259.

[12] LI Xiang, CUI Ji-feng, QI Jian-xun, YANG Shang-dong. Energy transmission nodes based on Tabu search and particle swarm hybrid optimization algorithm[J]. Journal of Central South University of Technology, 2007, 14(1): 144-148.

Foundation item: Project(70671040) supported by the National Natural Science Foundation of China

Received date: 2007-08-02; Accepted date: 2007-09-16

Corresponding author: LI Xing-mei, Doctoral candidate; Tel: +86-10-51963744; E-mail: xingmeil@163.com

- An extended particle swarm optimization algorithm based on coarse-grained and fine-grained criteria and its application